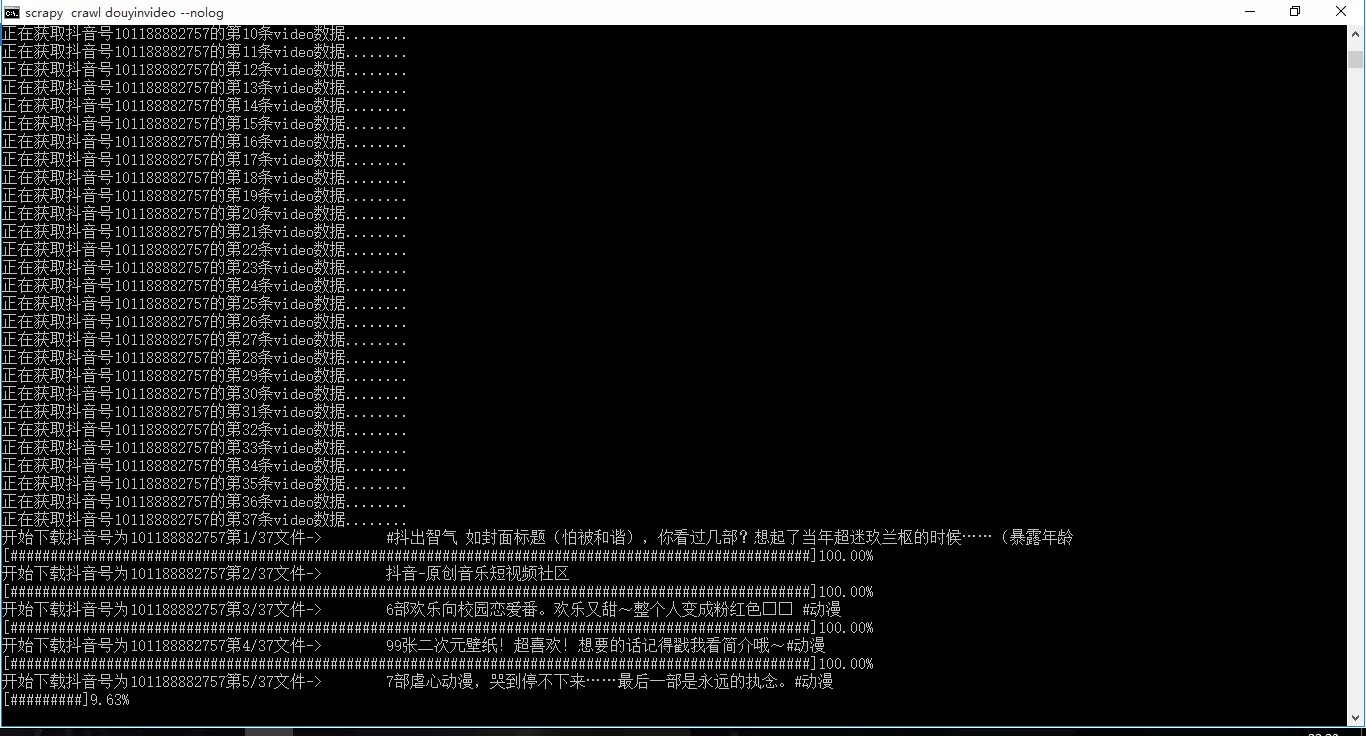

import scrapy
import requests
import re
import os
import json
from scrapy.utils.project import get_project_settings

class DouyinvideoSpider(scrapy.Spider):
    name = 'douyinvideo'
    # allowed_domains takes bare domain names (no scheme, no wildcard); subdomains are matched automatically.
    allowed_domains = ['v.douyin.com', 'www.iesdouyin.com', 'www.amemv.com', 'ixigua.com']
    # get_json_url = "https://www.amemv.com/aweme/v1/aweme/post/?{0}"  # Endpoint for the JSON data; some of these links no longer work.
    # new_ie_url = "https://www.douyin.com/aweme/v1/aweme/favorite/?{0}"
    new_ie_url = "https://www.iesdouyin.com/aweme/v1/aweme/post/?{0}"
    # url = "http://v.douyin.com/dXUNSp/"  # Short links are said to redirect to the long link; Scrapy follows the redirect automatically.
    url_num = 1  # Index of the next URL to read from url_list.txt
    start_urls = []
    # Seed the crawl with the first URL in the file ('rU' mode was removed in Python 3.11, so plain 'r' is used).
    with open("url_list.txt", "r") as url_file:
        for line in url_file:
            start_urls.append(line.rstrip('\n'))
            break
    uid = None
    dytk = None
    video_link_arr = []
    video_title_arr = []
    def_max_cursor = '0'
    _signature = ''
    video_num = 1

    def parse(self, response):
        # Reset the per-user state before crawling a new profile page.
        self.uid = None
        self.dytk = None
        self.video_link_arr = []
        self.video_title_arr = []
        self.def_max_cursor = '0'
        self._signature = ''
        self.video_num = 1
        # Extract the user id and the dytk token embedded in the page source.
        self.uid = str(re.findall('uid: "(.*)"', response.text)[0])
        self.dytk = re.findall("dytk: '(.*)'", response.text)
        self._signature = self.get_signature(self.uid)
        parms = self.get_parms(self.uid, self._signature, self.dytk[0], self.def_max_cursor)
        new_url = self.get_newurl(self.new_ie_url, parms)
        # dont_filter=True disables duplicate filtering; this first request also
        # probes whether the start URL is still valid.
        yield response.follow(new_url, callback=self.get_json, dont_filter=True,
                              headers=get_project_settings().get('DEFAULT_REQUEST_HEADERS'))

    def get_json(self, response):
        _json = json.loads(response.text)
        if not _json['aweme_list']:
            # The endpoint sometimes returns an empty list. Re-request the same URL
            # until it returns data; in testing, one of the retries always succeeded.
            # Note that this retry loop is unbounded.
            parms = self.get_parms(self.uid, self._signature, self.dytk[0], self.def_max_cursor)
            new_url = self.get_newurl(self.new_ie_url, parms)
            yield response.follow(new_url, callback=self.get_json, dont_filter=True,
                                  headers=get_project_settings().get('DEFAULT_REQUEST_HEADERS'))
        else:
            # A batch of JSON data was received.
            for _jsons in _json['aweme_list']:
                print("Douyin account %s: fetching video #%s ........" % (self.uid, self.video_num))
                self.video_num += 1
                self.video_title_arr.append(_jsons['share_info']['share_desc'])
                true_file_link = self.true_file_link(_jsons['video']['play_addr']['url_list'][0])
                self.video_link_arr.append(true_file_link)
            if _json['has_more']:
                # Paginate: request the next batch of videos.
                self.def_max_cursor = str(_json['max_cursor'])
                parms = self.get_parms(self.uid, self._signature, self.dytk[0], self.def_max_cursor)
                new_url = self.get_newurl(self.new_ie_url, parms)
                yield response.follow(new_url, callback=self.get_json, dont_filter=True,
                                      headers=get_project_settings().get('DEFAULT_REQUEST_HEADERS'))
            else:
                # Emit the video links together with the titles and the user id.
                yield {
                    'user_id': self.uid,
                    'file_name': self.video_title_arr,
                    'file_link': self.video_link_arr
                }
                # Move on to the next profile URL in url_list.txt, if there is one.
                next_url = self.next_url()
                if next_url:
                    yield response.follow(next_url, callback=self.parse, dont_filter=True,
                                          headers=get_project_settings().get('DEFAULT_REQUEST_HEADERS'))

    def get_signature(self, user_id):
        # Compute the _signature parameter by running the local Node.js script.
        user_id = str(user_id)
        _signature = os.popen('node _signature.js %s' % user_id)
        _signature = _signature.readlines()[0]
        _signature = _signature.replace("\n", "")
        return _signature

    def get_parms(self, user_id, _signature, dytk, max_cursor):
        # Build the query-parameter dictionary for the post-list endpoint.
        parms = {
            'user_id': user_id,
            'count': '21',
            'max_cursor': max_cursor,
            'aid': '1128',
            '_signature': _signature,
            'dytk': dytk
        }
        return parms

    def get_newurl(self, url, parms):
        # Join the parameters into a query string and insert it into the URL template.
        new_url = url.format('&'.join([key + '=' + parms[key] for key in parms]))
        return new_url

    def true_file_link(self, url):
        # Resolve the video's redirect: request without following it and read the Location header.
        r = requests.get(url, headers=get_project_settings().get('DEFAULT_REQUEST_HEADERS'),
                         allow_redirects=False)
        return r.headers['Location']

    def next_url(self):
        # Return the next URL that has not been crawled yet, or None when the file is exhausted.
        with open("url_list.txt", "r") as url_file:
            for num, line in enumerate(url_file):
                if num == self.url_num:
                    self.url_num += 1
                    return line.rstrip('\n')
        return None
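The spider builds its query string with a manual `'&'.join(...)`, which does not escape parameter values, and it picks the next profile URL by re-reading the file up to a given index. The following is a minimal sketch of both steps as standalone functions, assuming the same URL template and a file with one URL per line. The names `build_query_url` and `nth_line` are hypothetical and not part of the original spider, and the sample parameter values are placeholders.

```python
from itertools import islice
from urllib.parse import urlencode

# Same template as new_ie_url in the spider; {0} is replaced by the query string.
API_URL = "https://www.iesdouyin.com/aweme/v1/aweme/post/?{0}"


def build_query_url(template, params):
    """Insert a percent-encoded query string into the URL template."""
    return template.format(urlencode(params))


def nth_line(lines, index):
    """Return the line at a zero-based index without its newline, or None if absent."""
    selected = islice(lines, index, index + 1)
    return next((line.rstrip("\n") for line in selected), None)


if __name__ == "__main__":
    # Placeholder values for illustration only.
    params = {
        "user_id": "12345",
        "count": "21",
        "max_cursor": "0",
        "aid": "1128",
        "_signature": "placeholder signature",
        "dytk": "placeholder",
    }
    print(build_query_url(API_URL, params))
    print(nth_line(["http://v.douyin.com/a/\n", "http://v.douyin.com/b/\n"], 1))
```

Because `nth_line` accepts any iterable of lines, it works on an open file handle as well as on a list, which keeps it easy to test without touching `url_list.txt`.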